You give it files. It runs an embedding model on each one, then places the results in a 3D space where similar files end up near each other.
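This is a rough sketch of what "similar" could mean here. It assumes the app compares embeddings with cosine similarity, which is common for CLIP-style models, but this page doesn't confirm the exact metric. The function name and the toy 4-dimensional vectors are hypothetical. Real MobileCLIP embeddings have many more dimensions.

```swift
/// Cosine similarity between two embedding vectors.
/// 1.0 means they point the same way, 0.0 means they are unrelated (orthogonal).
func cosineSimilarity(_ a: [Float], _ b: [Float]) -> Float {
    precondition(a.count == b.count, "embeddings must have the same dimension")
    var dot: Float = 0, normA: Float = 0, normB: Float = 0
    for i in 0..<a.count {
        dot += a[i] * b[i]
        normA += a[i] * a[i]
        normB += b[i] * b[i]
    }
    let denom = normA.squareRoot() * normB.squareRoot()
    return denom == 0 ? 0 : dot / denom
}

// Hypothetical toy vectors standing in for real embeddings.
let catPhoto: [Float] = [0.9, 0.1, 0.0, 0.2]
let catCaption: [Float] = [0.8, 0.2, 0.1, 0.1]
let invoicePDF: [Float] = [0.0, 0.1, 0.9, 0.3]

print(cosineSimilarity(catPhoto, catCaption))  // close to 1: would be placed near each other
print(cosineSimilarity(catPhoto, invoicePDF))  // close to 0: would be placed far apart
```

A photo and a caption describing it can score high even though one is an image and the other is text. That is the property that lets mixed file types share one space.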

On-device processing

MobileCLIP runs locally on your Mac's Neural Engine. Your files never touch a server. Works offline, no accounts needed.

Mix any file type

Drop images, text files, source code, and PDFs into the same space. The model embeds all of them into one shared space and places them relative to each other.

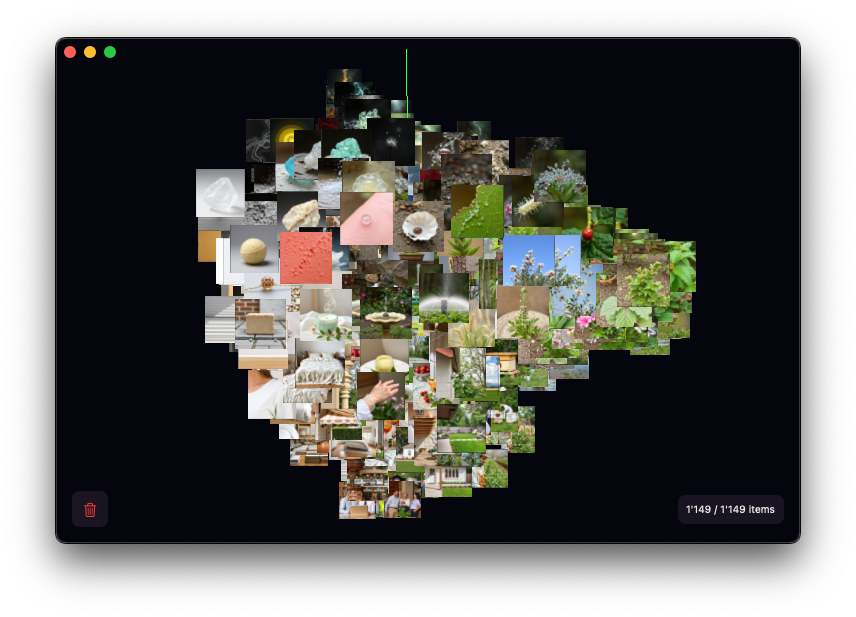

3D visualization

Embeddings are reduced to 3D and rendered with Metal. Rotate, zoom, and pan. It handles hundreds of items smoothly.
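One way to reduce embeddings to 3D is principal component analysis (PCA). This sketch uses power iteration with deflation on the covariance matrix. PCA is an assumed stand-in: the page doesn't say which reduction the app uses, and it could be a nonlinear method such as UMAP or t-SNE. `pcaProject`, its parameters, and the toy data are hypothetical, and `Double` is used for numerical simplicity even though GPU-side positions would typically be `Float`.

```swift
/// Dot product of two equal-length vectors.
func dot(_ a: [Double], _ b: [Double]) -> Double {
    zip(a, b).reduce(0.0) { $0 + $1.0 * $1.1 }
}

/// Matrix-vector product for a row-major square matrix.
func matVec(_ m: [[Double]], _ v: [Double]) -> [Double] {
    m.map { dot($0, v) }
}

/// Projects embeddings onto their top `k` principal components (PCA),
/// using power iteration with deflation on the covariance matrix.
func pcaProject(_ points: [[Double]], k: Int = 3, iterations: Int = 200) -> [[Double]] {
    guard let dim = points.first?.count else { return [] }
    let n = Double(points.count)

    // 1. Center every point on the mean embedding.
    var mean = [Double](repeating: 0, count: dim)
    for p in points { for j in 0..<dim { mean[j] += p[j] / n } }
    let centered = points.map { p in (0..<dim).map { p[$0] - mean[$0] } }

    // 2. Covariance matrix (dim x dim).
    var cov = [[Double]](repeating: [Double](repeating: 0, count: dim), count: dim)
    for p in centered {
        for i in 0..<dim { for j in 0..<dim { cov[i][j] += p[i] * p[j] / n } }
    }

    // 3. Power iteration converges to the eigenvector with the largest
    //    eigenvalue; deflation removes it so the next pass finds the next axis.
    var axes: [[Double]] = []
    for c in 0..<k {
        var v = (0..<dim).map { 1.0 / Double($0 + c + 1) }  // arbitrary start vector
        let startNorm = dot(v, v).squareRoot()
        v = v.map { $0 / startNorm }
        for _ in 0..<iterations {
            let w = matVec(cov, v)
            let norm = dot(w, w).squareRoot()
            if norm < 1e-12 {  // no variance left (fewer than k informative axes)
                v = [Double](repeating: 0, count: dim)
                break
            }
            v = w.map { $0 / norm }
        }
        let lambda = dot(v, matVec(cov, v))  // eigenvalue estimate
        for i in 0..<dim { for j in 0..<dim { cov[i][j] -= lambda * v[i] * v[j] } }
        axes.append(v)
    }

    // 4. A point's 3D position is its projection onto each principal axis.
    return centered.map { p in axes.map { dot(p, $0) } }
}

// Toy 5-D "embeddings" whose spread shrinks from axis 0 to axis 2.
let embeddings: [[Double]] = [
    [2, 0, 0, 0, 0], [-2, 0, 0, 0, 0],
    [0, 1, 0, 0, 0], [0, -1, 0, 0, 0],
    [0, 0, 0.5, 0, 0], [0, 0, -0.5, 0, 0],
]
let positions = pcaProject(embeddings)  // six [x, y, z] points
```

PCA is linear and deterministic, so it is cheap and stable for a few hundred items. Nonlinear methods usually separate clusters more clearly, at the cost of more computation and less predictable layouts.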

Drag and drop

Drop files onto the window. They show up in the visualization as embeddings are computed. No import wizard, no project setup.

Native macOS

SwiftUI app. Small download, fast to open, doesn't use much memory. Looks and behaves like the rest of your Mac.

Semantic clustering

Files with similar content end up near each other. Can be useful for spotting patterns, finding near-duplicates, or just getting an overview of a folder.
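Here is a minimal sketch of near-duplicate detection. It assumes closeness is measured with cosine similarity on the full embeddings, before they are reduced to 3D. `FileEmbedding`, `nearDuplicatePairs`, the 0.95 threshold, and the file names are all hypothetical illustrations, not the app's API.

```swift
/// Hypothetical pairing of a file name with its embedding.
struct FileEmbedding {
    let name: String
    let vector: [Float]
}

/// Scales a vector to unit length so a plain dot product equals cosine similarity.
func normalized(_ v: [Float]) -> [Float] {
    let norm = v.reduce(Float(0)) { $0 + $1 * $1 }.squareRoot()
    return norm == 0 ? v : v.map { $0 / norm }
}

/// Returns every pair of files whose cosine similarity is at least `threshold`,
/// most similar first. O(n^2) comparisons, which is fine for hundreds of files.
func nearDuplicatePairs(_ files: [FileEmbedding], threshold: Float = 0.95) -> [(String, String, Float)] {
    let units = files.map { normalized($0.vector) }
    var pairs: [(String, String, Float)] = []
    for i in 0..<files.count {
        for j in (i + 1)..<files.count {
            let sim = zip(units[i], units[j]).reduce(Float(0)) { $0 + $1.0 * $1.1 }
            if sim >= threshold { pairs.append((files[i].name, files[j].name, sim)) }
        }
    }
    return pairs.sorted { $0.2 > $1.2 }
}

let files = [
    FileEmbedding(name: "IMG_0012.jpg", vector: [0.90, 0.10, 0.00, 0.20]),
    FileEmbedding(name: "IMG_0012 copy.jpg", vector: [0.89, 0.11, 0.01, 0.20]),
    FileEmbedding(name: "notes.txt", vector: [0.10, 0.90, 0.30, 0.00]),
]
for (a, b, sim) in nearDuplicatePairs(files) {
    print("\(a) ~ \(b): \(sim)")
}
```

Comparing full embeddings rather than 3D positions avoids false matches from points that land close together only because the reduction squeezed them.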